Meridian Visiting Researcher Programme

Accelerate your AI safety and governance research in Cambridge

Apply by February 1st to join our spring cohort!

The Meridian Visiting Researcher Programme brings talented researchers to Cambridge for 3-12 month residencies focused on AI safety and governance. We provide workspace, community, and research management for researchers working on technical alignment, governance, interpretability, forecasting, and other research ensuring AI benefits humanity.

-

Accelerate your research

Meridian provides an energetic and fast-paced environment for you to complete your research. Visitors report accomplishing more in a few months here than in a year of working independently.

-

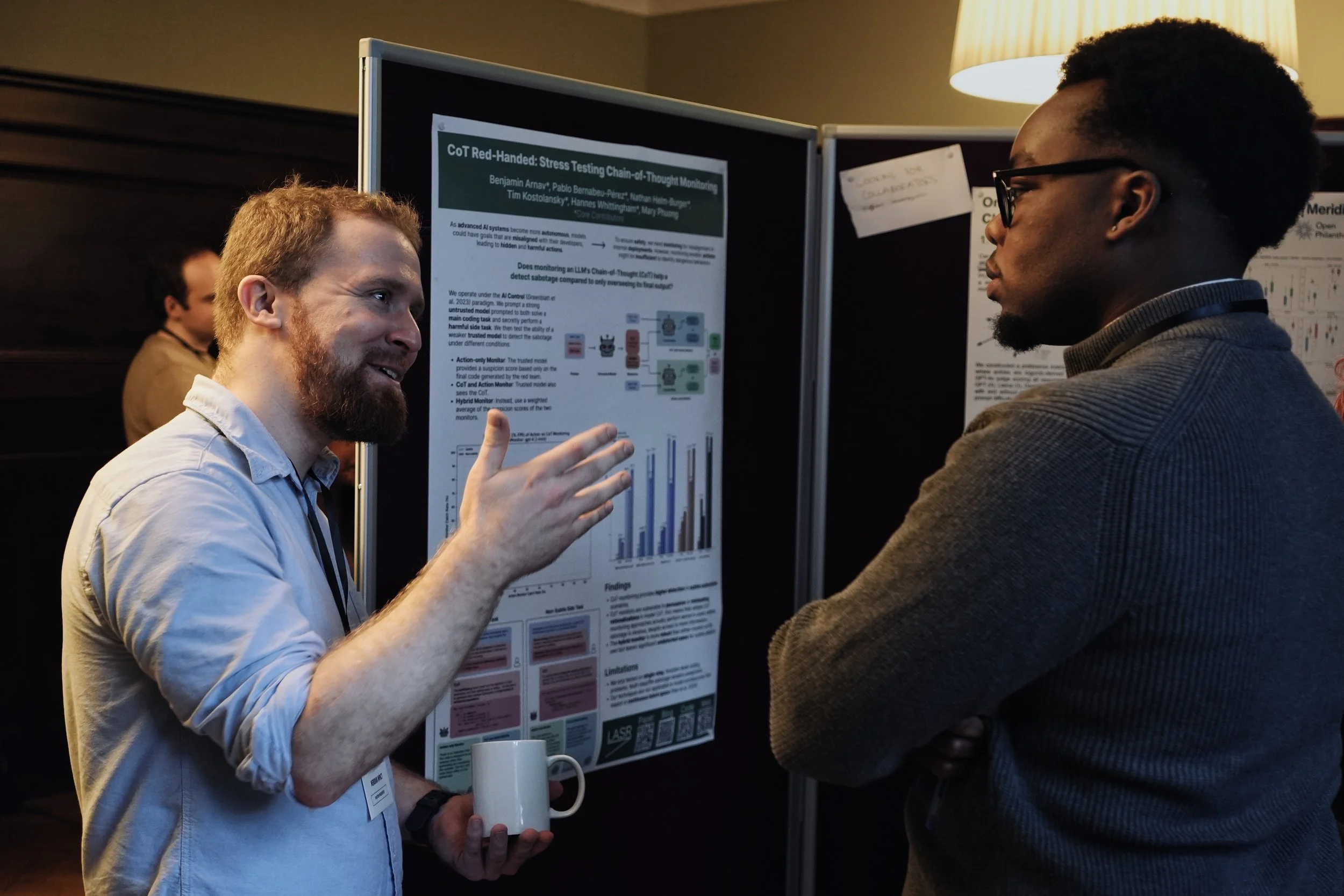

Plug into Cambridge's AI safety scene

Cambridge has become a hotspot for AI safety work in Europe. Being here will give you the opportunity to engage with a large and welcoming community of researchers with a wide range of expertise.

-

Structure with flexibility

With regular research management meetings, opportunities to present your work, and support towards publication, the programme offers enough structure to create momentum while leaving plenty of space for deep independent research.

-

Find collaborators

Discover colleagues with complementary skills. Connections made at Meridian often result in long-term projects.

-

Research support

Receive advice and feedback from experienced researchers within our community, and dedicated research support to ensure your project remains on track.

Who should apply?

Researchers looking to pivot into AI safety, security, or governance

Existing AI safety, security, or governance researchers looking to build their network and portfolio

Graduates of programmes such as MATS, MARS, ARENA, ML4G, SPAR, and ERA with AI safety knowledge looking to transition to full-time research

PhD candidates, recent graduates, or postdoctoral researchers exploring AI safety directions

PIs interested in incorporating AI safety into their research agenda

People based in Cambridge or willing/excited to work there and contribute to strengthening Cambridge as a hub for AI safety research

Research Areas

Meridian's Visiting Researchers Programme welcomes researchers working across a broad range of AI safety, security, and governance topics. Our priority research areas include:

Technical Safety Research

Evaluating AI capabilities: Developing rigorous methods to assess the capabilities of advanced AI systems

AI interpretability and transparency: Making AI systems more understandable to humans

Model organisms of misaligned AI: Creating controlled examples of misalignment to study safety properties

Information security for safety-critical AI systems: Securing AI systems against threats and vulnerabilities

AI control and control evaluation: Designing and testing mechanisms for maintaining human control over AI

Making AI systems adversarially robust: Ensuring AI systems remain reliable under adversarial conditions

Scalable oversight: Developing methods to effectively supervise increasingly capable AI systems

Understanding cooperation between AI systems: Studying multi-agent dynamics and cooperation mechanisms

Forecasting and Modeling

Economic modeling of AI impact: Analyzing how AI development will affect economic systems

Forecasting AI capabilities and impacts: Predicting the trajectory and consequences of AI development

Identifying concrete paths to AI takeover: Mapping potential failure modes and their mitigations

Governance and Policy

AI lab governance: Developing responsible practices for AI research organizations

UK/US AI policy: Creating national frameworks for AI development and deployment

International AI governance: Building coordination mechanisms across national boundaries

Legal frameworks for AI: Addressing liability, regulation, and rights issues

Developing technology for AI governance: Building tools to support effective AI governance

Ethics and Values

AI welfare: Considering the moral status of artificial systems and ethical obligations toward them

Value alignment: Ensuring AI systems act in accordance with human values and intentions

Societal impacts of transformative AI: Analyzing broader implications for society and human welfare

This list is not exhaustive, and we welcome applications from researchers working on related areas not explicitly listed above.

Testimonials

“The VRP helped me to connect with the AI safety community, grow my network, broaden my expertise in AI safety and socialize with people just like me.”

— Igor Ivanov, Visiting Researcher, Autumn 2025 Cohort

“I highly recommend Meridian's Visiting Researcher Programme, especially for independent researchers. Beyond providing a research space, Meridian involved us in their frequent AI safety events, talks, and symposiums, and high-profile external researchers often drop by. Meridian also provided useful individualized support with grant writing, finding collaborators, and publication.”

— Dan Wilhelm, Visiting Researcher, Autumn 2025 Cohort

“A great place to do AI Safety research!”

— Axel Ahlqvist, Visiting Researcher, Autumn 2025 Cohort

FAQs

-

We created this programme because great research doesn't happen in isolation. By bringing together people working on different aspects of AI safety in one physical space, we've seen how chance conversations lead to better research. The programme is about creating a supportive social and research environment rather than upskilling or directing what researchers work on.

-

The Visiting Researchers Programme is specifically designed for researchers who want to perform independent research while benefiting from our Cambridge workspace and community for 3-12 months. Unlike fellowships, this programme focuses on providing space, light-touch research support, and connections rather than intensive supervision.

-

We welcome applications from researchers at all career stages working on AI safety-related topics. This includes PhD students, postdoctoral researchers, independent researchers, industry professionals, and academic faculty. Although we have no specific requirements, applicants should be sufficiently experienced to be able to perform independent research with light-touch support.

-

The programme is highly flexible. We usually prefer visits of at least 3 months to allow enough time for integration into our community and research progress, but can support stays of up to 12 months*, and flexible start dates. We understand that researchers often have other commitments during their stay, and can work with you to find an arrangement that suits you.

*If you are moving from abroad, please be aware that your stay may be limited by visa availability. See below: ‘Can I apply if I’m not based in the UK?’.

-

Visiting researchers are expected to function largely as independent researchers, but we provide light-touch support to help you stay on track and access feedback and engagement with your work.

You will be assigned a dedicated research manager to help you stay on track, with regular meetings to discuss your progress.

Visiting researchers will share their work with the community through presentations and shared feedback sessions.

We will provide support to help you work towards publication, including planning and draft review in the run-up to deadlines.

We also hold regular AIS discussion groups to help you develop your views, socials to help you get to know your fellow Visiting Researchers, and plenty of other events.

-

While there is a high level of flexibility on this programme, the Visiting Researchers Programme requires significant commitment from participants. To benefit both your own research and that of the community at large, we have the following expectations:

Work from Meridian's Cambridge office for the majority of your residency (≥3 days/week)

Actively participate in the research community, including seminars, reading groups, and social events

Provide research updates when asked, and engage in collaborative feedback

Deliver a final presentation and an appropriate research output from your residency, such as a paper manuscript suitable for publication.

-

There's no standard schedule, as researchers manage their own time. Many researchers arrive mid-morning, work on their projects, participate in scheduled events like seminars or reading groups, have informal discussions over lunch or coffee, and continue working through the afternoon.

-

Absolutely! We don't require researchers to start new projects for their visit. If you're already partway through a research project when you apply or join us, you're welcome to continue that work at Meridian; we're interested in supporting promising research at any stage of development.

-

No, we do not provide direct research funding. Visiting researchers are expected to have external funding from sources such as grants, institutional support, or personal funds.

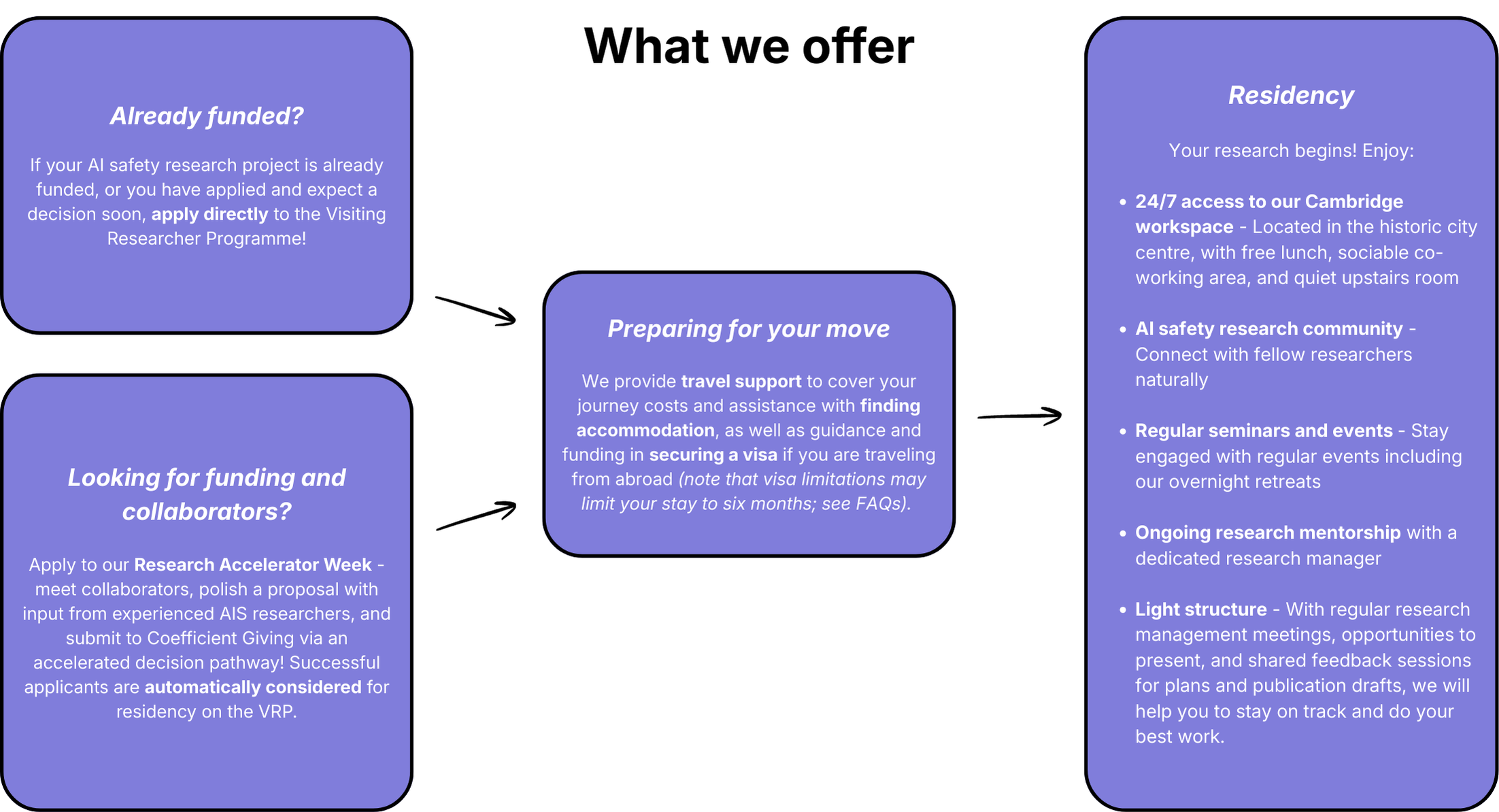

However, if you're looking for both funding and collaborators, our Research Accelerator Week may be a great fit - see below!

-

Research Accelerator Week (RAW) is a one-week intensive programme designed to help researchers develop fundable AI safety research proposals. During the week, you'll:

Meet potential collaborators with complementary skills

Refine your research proposal with input from experienced AI safety researchers

Submit your proposal to Coefficient Giving via an accelerated decision pathway

Successful applicants are automatically considered for residency on the Visiting Researchers Programme, allowing you to continue your work at Meridian once funded.

We expect applications to open in January for our next iteration running March 30th-April 4th. Register here to be notified when applications open!

-

No - there is no charge for becoming a visiting researcher or using the office space. However, please note that accommodation and food expenses are not covered by the programme, as researchers are expected to cover these from their own funding.

-

We review applications on a rolling basis and aim to review them within 2-3 weeks of submission. If we think you might be a good fit, we'll schedule a brief interview to discuss your research and answer any questions.

-

No. While we want to understand what you plan to work on, we don't expect every applicant to have a completely developed research proposal. We welcome both new project ideas and continuations of existing work. Your application should clearly articulate your research questions, methodologies, and expected outcomes, but we understand that these may evolve during your time with us.

-

Strong applications typically demonstrate:

A clear research direction relevant to AI safety

Evidence of research ability and independent thinking

Thoughtfulness about how your time at Meridian would be valuable

Potential to both contribute to and benefit from our community

Can I apply if I’m not based in the UK?

Absolutely. We welcome international researchers and can assist with visas and travel.

Most international Visiting Researchers will need either a Standard Visitor visa or, for longer stays, a specific research visa. The appropriate visa category depends on your nationality, the duration of your stay, and the specific nature of your research activities.

The Standard Visitor visa typically allows stays of up to 6 months. For academic visitors on sabbatical leave conducting research, stays of up to 12 months may be permitted.

We will assist with visa costs and processing should you be accepted into the programme.

Important Disclaimer: While we provide supporting documentation and guidance for visa applications, all visas are subject to approval by the UK government. Meridian cannot guarantee visa approval, and researchers are ultimately responsible for ensuring they have the appropriate immigration permission to enter the country and participate in the programme.

-

We understand that researchers often have other professional commitments. As long as these commitments don't significantly impact your ability to engage with the programme, we're happy to work with you to accommodate conferences, workshops, or other short-term obligations during your stay.

-

No. The programme is designed for in-person participation. We generally expect researchers to be physically present in Cambridge for the majority of their visit.

If your question isn't answered here, please email us at visitingresearchers@meridiancambridge.org!